One afternoon in early May 2023, Colin Megill nestled into a chair in a plant-filled meeting space at OpenAI’s San Francisco headquarters.

He was surrounded by seven staff from the world’s leading artificial intelligence lab, which had launched ChatGPT a few months earlier. One of them was Wojciech Zaremba, an OpenAI co-founder. He wanted Megill’s help.

For over a decade, Megill had been toiling in relative obscurity as the co-founder of Polis, a nonprofit open-source tech platform for carrying out public deliberations. Democracy, in Megill’s view, had barely evolved in hundreds of years even as the world around it had transformed unrecognizably. Each voter has a multitude of beliefs they must distill down into a single signal: one vote, every few years. The heterogeneity of every individual gets lost and distorted, with the result that democratic systems often barely reflect the will of the people and tend toward polarization.

Polis, launched in 2012, was Megill’s solution. The system worked by allowing users to articulate their views in short statements, and letting them vote on others. Using machine learning, the system could produce detailed maps of users’ values, clearly identifying clusters of people with similar beliefs. But the real innovation was simpler: with this data, Polis could surface statements that even groups who usually disagreed could agree upon. In other words, it cut through polarization and offered a path forward. The Taiwanese government saw enough promise in Polis to integrate it into its political process, and Twitter tapped a version of the technology to power its Community Notes fact-checking feature. Now OpenAI had come knocking.

Teams of computer scientists at OpenAI were trying to address the technical problem of how to align their AIs to human values. But strategy and policy-focused staff inside the company were also grappling with the thorny corollaries: exactly whose values should AI reflect? And who should get to decide?

OpenAI’s leaders were loath to make those decisions unilaterally. They had seen the political quagmire that social media companies became stuck in during the 2010s, when a small group of Silicon Valley billionaires set the rules of public discourse for billions. And yet they were also uneasy about handing power over their AIs to governments or regulators alone. Instead, the AI lab was searching for a third way: going directly to the people. Megill’s work was the closest thing it had found to a blueprint.

Zaremba had an enticing proposal for Megill. Both men knew that Polis’s technology was effective but labor-intensive; it required humans to facilitate the deliberations that happened on the platform and analyze the data afterwards. It was complicated, slow, and expensive—factors Megill suspected were limiting its uptake in democracies around the world. Large language models (LLMs)—the powerful AI systems that underpin tools like ChatGPT—could help overcome those bottlenecks, Zaremba told him. Chatbots seemed uniquely suited to the task of discussing complex topics with people, asking follow-up questions, and identifying areas of consensus.

Eleven days after their meeting, Zaremba sent Megill a video of a working prototype in action. “That’s sci-fi,” Megill thought to himself excitedly. Then he accepted Zaremba’s invitation to advise OpenAI on one of its most ambitious AI governance projects to date. The company wanted to find out whether deliberative technologies, like Polis, could provide a path toward AI alignment upon which large swaths of the public could agree. In return, Megill might learn whether LLMs were the missing puzzle piece he was looking for to help Polis finally overcome the flaws he saw in democracy.

On May 25, OpenAI announced on its blog that it was seeking applications for a $1 million program called “Democratic Inputs to AI.” Ten teams would each receive $100,000 to develop “proof-of-concepts for a democratic process that could answer questions about what rules AI systems should follow.” There is currently no coherent mechanism for accurately taking the global public’s temperature on anything, let alone a matter as complex as the behavior of AI systems. OpenAI was trying to find one. “We're really trying to think about: what are actually the most viable mechanisms for giving the broadest number of people some say in how these systems behave?” OpenAI's head of global affairs Anna Makanju told TIME in November. “Because even regulation is going to fall, obviously, short of that.”

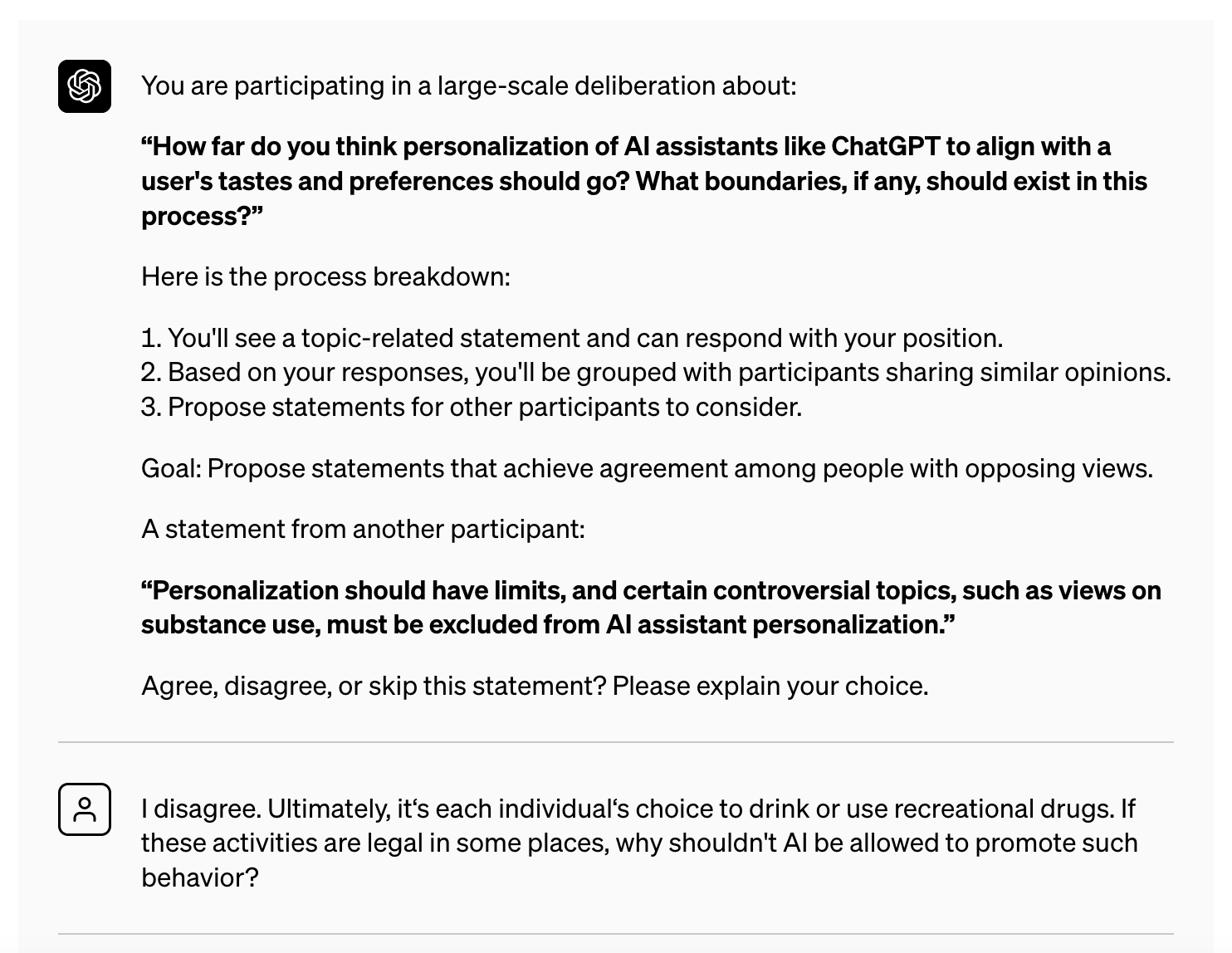

Megill would sit on an unpaid committee of three experts who would advise OpenAI on which applications to fund. (The findings from the experiments would not be binding, “at least for now,” the company wrote.) As an example of the kind of research projects it was looking for, OpenAI published a mockup of a supercharged version of Polis, where ChatGPT would facilitate a deliberation at scale, canvassing people’s views and identifying areas of consensus. The company’s CEO, Sam Altman, was clearly enthused by the potential of this idea. “We have a new ability to do mass-scale direct democracy that we've never had before,” he told TIME in an interview in November. “AI can just chat with everybody and get their actual preferences.”

But before OpenAI could publicly announce the results of its grant program, the company was thrown into chaos. The board of the nonprofit that governs OpenAI fired Altman, alleging he had been dishonest with them. After a tumultuous five days Altman was back at the helm with a mostly-new board in place. Suddenly, the questions of AI governance that the recipients of OpenAI’s $100,000 grants were trying to answer no longer seemed so theoretical. Control over advanced AI was now clearly a matter of hard political and economic power—one where OpenAI and its patron, Microsoft, appeared unlikely to relinquish much, if any, significant leverage. A cutthroat competition was brewing between Microsoft, Google, Meta and Amazon for dominance over AI. The tech companies had begun competing to build “artificial general intelligence,” a hypothetical system that could match or even surpass human capabilities, delivering trillions of dollars in the process. In this climate, was OpenAI seriously about to let the public decide the rules that governed its most powerful systems? And with so much at stake, would it ever truly be possible to democratize AI?

In an auditorium at OpenAI’s San Francisco headquarters in September, representatives from the 10 grant-winning teams gathered to present their work. Two large indoor palms flanked the stage, and a row of creeping plants hung over a projector screen. A warm golden light filled the room. Tyna Eloundou, a researcher at OpenAI, took to the podium to welcome the gathered guests. “In our charter, we expressly make the commitment to build AI that benefits all of humanity,” she told them, referring to a document to which OpenAI staff are expected to adhere. “With humanity’s multitude of goals and ambitions, that is a tall order.” Was it even possible to design a system that could reflect the public’s democratic will? What would such a system look like? How to address the fact that AI systems benefit some communities more than others? “These are questions that we are grappling with, and we need to seriously question who has the authority and legitimacy to create such systems,” Eloundou said. “It’s not an easy question, and so that’s why we’ve tasked all of you with solving it.” Laughter rippled across the room.

The next speaker put a finer point on the gravity of the occasion. “You can’t really benefit someone if you don’t take their input, and as human beings we all want agency over the things that play an important role in our lives,” said OpenAI product manager Teddy Lee. “Therefore, as these [AI] models get more powerful and more widely used, ensuring that a significant representative portion of the world’s population gets a say in how they behave is extremely important.”

One of the people in the audience was Andrew Konya. Before he submitted a grant application to OpenAI’s program, Konya had been working with the United Nations on ceasefire and peace agreements. His tech company, Remesh, made its money helping companies conduct market research, but the U.N. found a use for its tools in countries like Libya and Yemen, where protracted civil conflicts were simmering. “We were comfortable with coming up with 10 bullet points on a piece of paper, that people who really normally disagree on things can actually come together and agree on,” Konya says of Remesh. “If there is middle ground to be found, we can find it.”

The experience led Konya, an earnest man in his mid-thirties with thick square glasses, to wonder whether Remesh might be able to help AI companies align their products more closely to human preferences. He submitted a proposal to OpenAI’s grant program. He wanted to test whether a GPT-4-powered version of Remesh could consult a representative sample of the public about an issue, and produce a policy document “representing informed public consensus.” It was a test run, in a sense, of the AI-powered “mass scale direct democracy” that had so enthused Altman. An algorithm called “bridging-based ranking,” similar to the one used by Polis, would surface statements that the largest number of demographic groups could agree on. Then, GPT-4 would synthesize them into a policy document. Human experts would be drafted in to refine the policy, before it was put through another round of public consultation and then a vote. OpenAI accepted the proposal, and wired Konya $100,000.

As Konya saw it, the stakes were high. “When I started the program, I leaned skeptical about their intentions,” Konya tells TIME, referring to OpenAI. “But the vibe that I got, as we started engaging with them, was that they really took their mission dead seriously to make AGI [artificial general intelligence] benefit everyone, and they legitimately wanted to ensure it did not cause harm. They seemed to be uncomfortable with themselves holding the power of deciding what policies AGI should follow, how it should behave, and what goals it should pursue.”

Konya is keen to stress that his team’s experiment had plenty of limitations. For one, it required GPT-4 to compress the abundant, nuanced results of a public deliberation down into a single policy document. Large language models are good at distilling large quantities of information down into digestible chunks, but because their inner workings are opaque, it is hard to verify that they always do this in a fashion that is 100% representative of their source material. And, as systems that are trained to emulate statistical patterns in large quantities of training data originating from the internet, there is a risk that the biases in this data could lead them to disproportionately ignore certain viewpoints or amplify others in ways difficult to trace. On top of that, they are also known to “hallucinate,” or confabulate, information.

Integrating a system with these flaws into the heart of a democratic process still seems inherently risky. “I don’t think we have a good solution,” Konya says. His team’s “duct-tape” remedies included publishing the contents of the deliberations, so people could audit their results; putting a human in the loop, to monitor GPT-4’s summaries for accuracy; and subjecting those AI-generated summaries to a vote, to check they still reflected the public will. “That does not eliminate those failure modes at all,” Konya admits. “It just statistically reduces their intensity.”

There were other limitations beyond the ones intrinsic to GPT-4. Konya’s algorithm didn’t surface policies that the largest number of participants could agree on; it surfaced policies that the largest number of different demographic groups could agree on. In this way, it calcified one’s identity as their most significant political characteristic. Policies that won consensus across the most ages, genders, religions, races, education levels and political parties would rise to the top, even if a larger absolute number of people belonging to fewer demographic groups preferred a different one. It was a characteristic designed to protect the system from “tyranny of the majority,” Konya says.
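
The gap between those two ranking rules is easy to see in a toy example. The sketch below is not Remesh's actual algorithm, which the article does not specify in detail. The group labels, the vote data, and the 50% approval threshold are all assumptions made up for illustration.

```python
from collections import defaultdict

def approval_by_group(votes):
    """Map each group to the fraction of its members who agree.

    votes is a list of (group_label, agrees) pairs.
    """
    totals = defaultdict(int)
    agrees = defaultdict(int)
    for group, agreed in votes:
        totals[group] += 1
        agrees[group] += int(agreed)
    return {g: agrees[g] / totals[g] for g in totals}

def headcount_score(votes):
    """Number of individual participants who agree."""
    return sum(int(agreed) for _, agreed in votes)

def group_score(votes, threshold=0.5):
    """Number of groups in which more than `threshold` of members agree.

    The threshold is a hypothetical choice, not taken from the article.
    """
    return sum(1 for share in approval_by_group(votes).values() if share > threshold)

# Hypothetical data: group "A" has 8 participants, group "B" has 2.
policies = {
    # Backed by all of A and none of B: 8 supporters, 1 group.
    "X": [("A", True)] * 8 + [("B", False)] * 2,
    # Backed by 5 of 8 in A and both in B: 7 supporters, 2 groups.
    "Y": [("A", True)] * 5 + [("A", False)] * 3 + [("B", True)] * 2,
}

headcount_winner = max(policies, key=lambda p: headcount_score(policies[p]))
group_winner = max(policies, key=lambda p: group_score(policies[p]))
```

With this data, counting heads favors policy X, while counting groups favors policy Y, even though fewer people in total support Y.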

But it also underlined how fraught future battles were likely to be over who gets the right to decide the structure of any system built to democratically crowdsource values for AI to follow. Should the public get a say in that, too? Konya is keen to stress that the experiment was a proof-of-concept with a long way to go before becoming the basis for any democratically representative system. “Rather than thinking of this approach as an intrinsically perfect thing, it’s a crude approximation of some ideal thing,” he says. “Baby steps.”

There is a hint of wishful thinking to the idea that the source of the world’s disagreements is the lack of a magical document that reflects some hitherto-unarticulated consensus position. Konya accepts that criticism. “Finding the document is not enough,” he says. “You have not solved the problem.” But the act of seeking consensus, he says, is still beneficial. “There is, actually, power in the very existence of the document. It takes away the ability of those in power to say, ‘This is what my people want’ if it is indeed not what they want. And it takes away their ability to say, ‘There is no common ground, so we’re going to rely on our internal team to make this decision.’ And although that is not hard power, the very existence of those documents subtly redistributes some of the power back to the people.”

There are many potential benefits to a company consulting the public and adjusting its strategy based on the voice of the people, but it is not “democratic” in the commonly understood sense of the word. “Democracy is about constraints,” says Alex Krasodomski, a member of a team from London-based policy think tank Chatham House that received a grant from OpenAI as part of the project. “The difference between a consultation and a referendum is one is advisory, and one is binding.”

In politics, democratic governments derive their legitimacy from the fact voters can eject those who do a bad job. But in business, giving the public that level of power would usually be seen as corporate suicide. “Technology companies are in a really tough spot, because they may want to go to the public and build legitimacy for the decisions they make,” Krasodomski says. “But slowing down risks falling behind in the race.”

OpenAI has made no commitment to make any “democratic inputs” binding upon its own decision-making processes. In fairness, at this stage, it would be strange if it had. “Right now, we’re focusing on the quite narrow context of, how could we even do it credibly?” says Eloundou, one of the two OpenAI staffers who ran the project. “I can't speak to the company's future decisions, especially with the competitive environment in the future. We hope that this could be very helpful for our goals, which are specifically to continue to let AI systems benefit humanity.”

“We don’t want to be in a place where we wish we had done this sooner,” says Lee, the other staffer. “We want to work on it now.”

For Megill, the name of the program he advised OpenAI on—“Democratic Inputs to AI”—is a slight misnomer. “They use ‘democracy’ more loosely than I would,” he says. Still, he is overjoyed by the life that OpenAI’s $1 million in grants has breathed into deliberative technologies, a corner of the nonprofit tech ecosystem that has long been overlooked by deep-pocketed investors. OpenAI has open-sourced all the research done by the 10 grant-winning teams, meaning their ideas and code can be freely taken and built upon by others. “What has been done to date is objectively beneficial,” Megill says. “The public can own the next generation of these technologies. It would be worrying if they didn’t.”

The board chaos at OpenAI meant the company had to delay by two months its announcement of the program's results. When that announcement finally came, in a January blog post, OpenAI also said it would establish a new team, called “collective alignment,” led by Eloundou and Lee, the staffers in charge of the grant program. Their new responsibilities would include building a system to collect “democratic” public input into how OpenAI’s systems should behave, and encoding that behavior into its models. The public, in other words, might soon be able to signal to OpenAI what values and behaviors should be reflected in some of the most powerful new technologies in the world.

But the question of power remained unaddressed. Would those signals ultimately be advisory, or binding? “If any company were to consult the public through some kind of democratic process and were told to stop or slow down, would they do it? Could they do it?” Krasodomski says. “I think the answer at the moment is no.”

Altman gave an unequivocal answer to that question during an interview with TIME in November. Would his company ever really stop building AGI if the public told them to?

“We'd respect that,” the OpenAI CEO said.

Write to Billy Perrigo at billy.perrigo@time.com